Limitations

In multi-cluster deployments, Kubernetes load balancers don't allow TCP and UDP traffic on the same port, which prevents peer discovery from working natively for each pod. Use one of the following approaches to work around this limitation:

- Disable discovery and use static nodes so that only TCP traffic is needed. TCP-only traffic isn't an issue for load balancers or for exposing nodes publicly.

- If you need discovery, use CNI, which all major cloud providers support. The cloud templates already have CNI implemented.

CNI

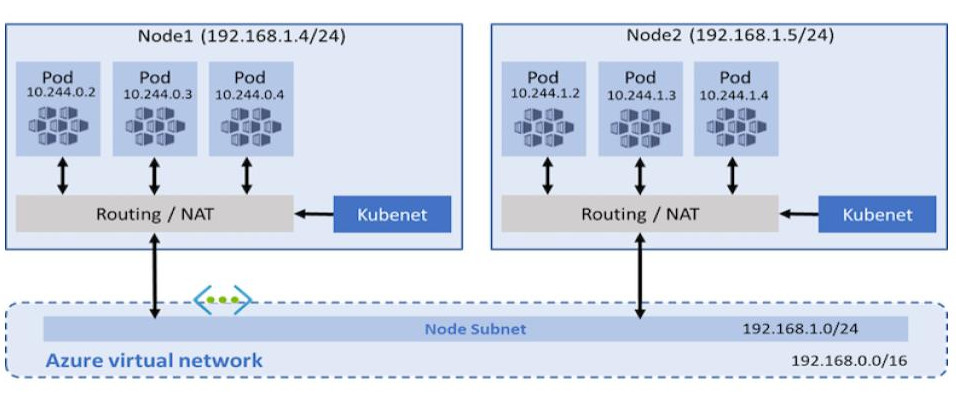

With the traditional kubenet networking mode, nodes get an IP from the virtual network subnet. Each node in turn uses NAT so that its pods can reach other pods on the virtual network. This limits what the pods can reach and, more importantly, what can reach them. For example, an external VM needs custom routes to reach the pods, which doesn't scale well.

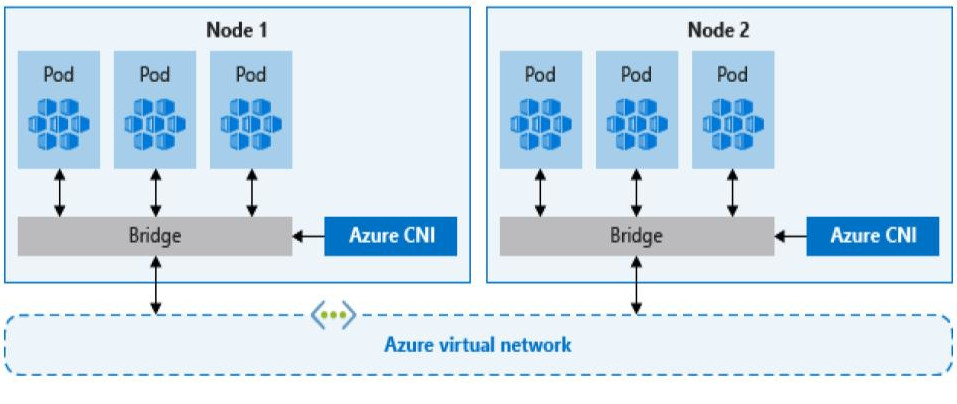

CNI, on the other hand, allows every pod to get a unique IP directly from the virtual subnet, which removes this restriction. However, because the subnet has a finite number of addresses, there is a limit on the maximum number of pods that can be spun up, so you must plan ahead to avoid IP exhaustion.
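To make the planning step concrete, the following is a minimal sketch of a capacity check. It assumes that each node consumes one address for itself plus one per pod it may host, and that the cloud provider reserves a handful of addresses in each subnet. The function name, the default of five reserved addresses, and the example values are illustrative assumptions, so check your provider's documentation for the real figures.

```python
import ipaddress


def pod_ip_capacity(subnet_cidr, node_count, max_pods_per_node, reserved_by_cloud=5):
    """Return (usable, required, headroom) address counts for a flat CNI subnet.

    reserved_by_cloud is an assumed number of addresses the provider keeps
    for itself in each subnet; adjust it for your provider.
    """
    subnet = ipaddress.ip_network(subnet_cidr)
    usable = subnet.num_addresses - reserved_by_cloud
    # Assumption: one address per node plus one per potential pod on that node.
    required = node_count * (1 + max_pods_per_node)
    return usable, required, usable - required


if __name__ == "__main__":
    usable, required, headroom = pod_ip_capacity(
        "10.0.0.0/22", node_count=10, max_pods_per_node=30
    )
    print(f"usable={usable} required={required} headroom={headroom}")
    if headroom < 0:
        print("Subnet is too small for this cluster size.")
```

A negative headroom means the subnet can't hold the planned cluster, so you need a larger CIDR block or fewer pods per node.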

Multi-cluster

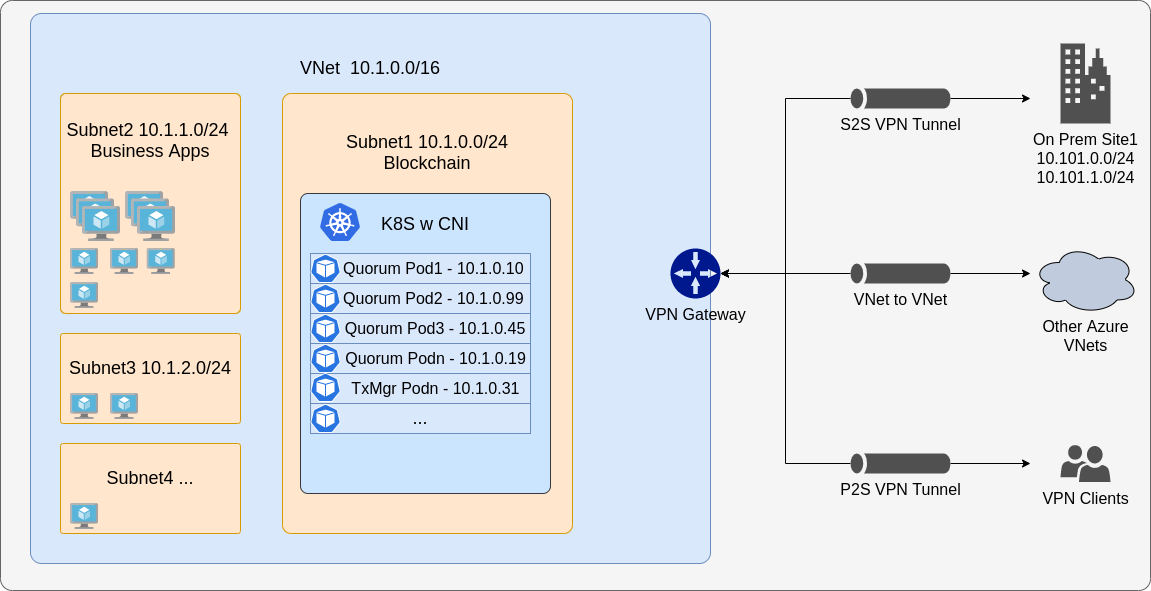

You must enable CNI to use multi-cluster, or to connect external nodes to an existing Kubernetes cluster. To connect multiple clusters, each cluster must have a different CIDR block so that addresses don't conflict. The first step is to peer the VPCs or VNets together and update the route tables. From that point on, you can use static nodes, and pods can communicate across the clusters.

The same setup also works to connect external nodes and business applications from other infrastructure, either in the cloud or on-premises.

When CNI is used, multi-cluster support is simple, but you have to account for cross-cluster DNS names. Ideally, create two separate VPCs (or VNets) and make sure they have different base CIDR blocks so that IPs don't conflict. Once done, peer the VPCs together and update the subnet route tables, so that they effectively form a single large network.
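Before peering, it can help to verify that the planned address ranges really are disjoint. The following is a minimal sketch using Python's standard `ipaddress` module. The function name and the cluster names and CIDR values in the example are hypothetical.

```python
import ipaddress
from itertools import combinations


def find_cidr_conflicts(cidrs):
    """Return a list of (name_a, name_b) pairs whose CIDR blocks overlap.

    cidrs maps a label (for example a VPC or VNet name) to its CIDR block.
    """
    networks = {name: ipaddress.ip_network(cidr) for name, cidr in cidrs.items()}
    return [
        (name_a, name_b)
        for (name_a, net_a), (name_b, net_b) in combinations(networks.items(), 2)
        if net_a.overlaps(net_b)
    ]


if __name__ == "__main__":
    # Hypothetical address plan for two clusters.
    plan = {"cluster-a": "10.0.0.0/16", "cluster-b": "10.1.0.0/16"}
    conflicts = find_cidr_conflicts(plan)
    print("conflicts:", conflicts if conflicts else "none")
```

An empty result means the blocks can be peered without address conflicts. Any returned pair needs to be re-planned before peering.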

When you spin up the clusters, use CNI and set the cluster CIDR blocks to match the subnet's CIDR settings. Then deploy the genesis chart on one cluster and copy the genesis file and static nodes config maps across to the other cluster. Depending on your DNS settings, the static node entries might work as is, or they might need to be actual IP addresses. This means you can provision cluster B only after cluster A has GoQuorum nodes up and running. Deploy the network on cluster A, and then on cluster B. GoQuorum nodes on cluster A should work as expected, and GoQuorum nodes on cluster B should use the provided list of peers to communicate with the nodes on cluster A. Keeping the list of peers on the clusters live and up to date can be challenging, so we recommend using the cloud service provider's DNS service, such as Route 53 or Azure DNS, and adapting the charts to create entries for each node when it comes up.
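As an illustration of the peer list that cluster B consumes, the following sketch renders a JSON array of enode URLs from a list of cluster A nodes. It is not the charts' own implementation. The function name, the placeholder public keys, the host names, and the default port of 30303 are assumptions. The `discport=0` query parameter marks a peer as not using UDP discovery, which matches the static nodes approach. Depending on your consensus mechanism and DNS setup, the URLs may need extra parameters or IP addresses instead of host names.

```python
import json


def build_static_nodes(nodes, p2p_port=30303):
    """Render a JSON array of enode URLs for a static nodes file.

    nodes is a list of dicts with 'pubkey' (hex node public key, no 0x prefix)
    and 'host' (an IP address or a DNS name resolvable from the other cluster).
    """
    urls = [
        f"enode://{node['pubkey']}@{node['host']}:{p2p_port}?discport=0"
        for node in nodes
    ]
    return json.dumps(urls, indent=2)


if __name__ == "__main__":
    # Placeholder keys and addresses; replace them with the values from cluster A.
    cluster_a_nodes = [
        {"pubkey": "a" * 128, "host": "10.0.1.10"},
        {"pubkey": "b" * 128, "host": "10.0.1.11"},
    ]
    print(build_static_nodes(cluster_a_nodes))
```

If you adopt the DNS-based approach, the `host` values can be the DNS records that the adapted charts create for each node, so the list doesn't need to change when pod IPs do.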